By Mary Lee Duff, LEED AP, Associate IIDA | Senior Director of Strategy

December 11, 2018

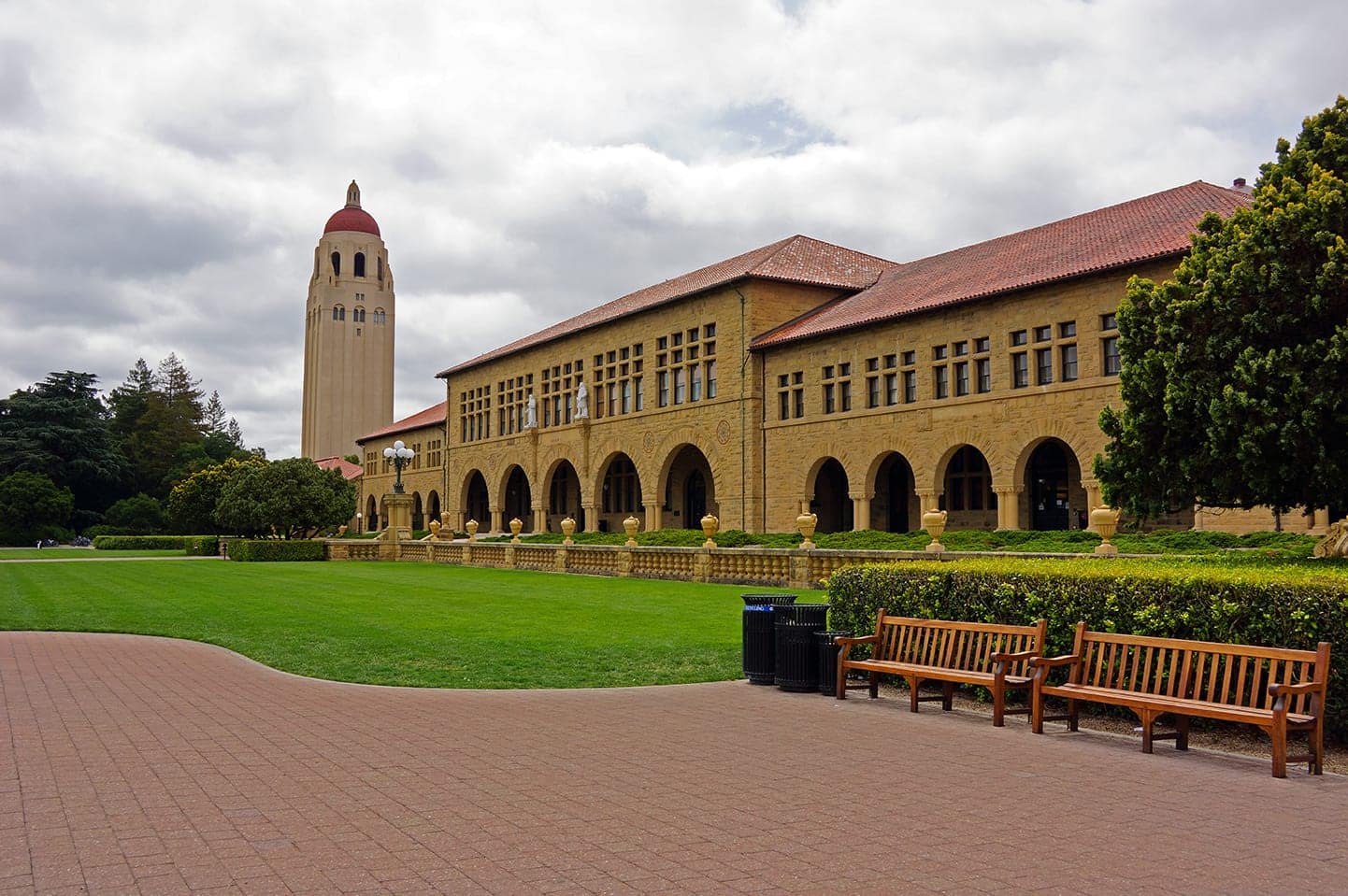

Stanford University, home of the annual mediaX conference.

Stanford University has a multidisciplinary program called mediaX, which explores how technology intersects with entertainment, learning, and wellness. The program, part of the Stanford Graduate School of Education, aims to connect disciplines to research and innovate on how people will collaborate, communicate, and interact with information, products, and the industries of tomorrow.

Part of this program is the annual mediaX conference, where speakers are invited to share topics related to an overall theme. This past fall, the 2018 event was titled "AI for Culturally Relevant Interactions Forum." IA Interior Architects has attended this annual conference for the last several years to gain insights relevant to our work in strategically understanding our clients’ goals, and to identify opportunities for developing highly productive and emotionally engaging workplaces. Over the years we’ve gained insights into neuroscience, human performance, and the issues around truth and transparency in the world of digital media and bots.

Artificial intelligence is a hot topic in the news and in business, and very much on the minds of design firms. In the workplace, we generally think about artificial intelligence in the context of sensors and machine learning that help us better understand utilization and use patterns. Numerous technology consulting companies are developing systems that draw on utilization statistics, and they are beginning to move toward a built environment that monitors and anticipates habits, settings, and room usage. This long-held vision is coming closer to reality: rooms and environments that anticipate your individual needs at your specific location, as well as in collaboration spaces, based on data collected about how we use, change, and occupy different space types.

We are learning that these future opportunities hold much more, along with some interesting warning signs around machine learning and AI. At the mediaX conference, several engaging presentations echoed concerns that many of these advances are being developed largely in WEIRD (Western, educated, industrialized, rich, and democratic) cultures, and that inherent biases will be built into the technology.

Recognizing those cultural biases, and designing technology that is more culturally inclusive and ethical, is a stated goal going forward. This was best explained by Rama Akkiraju, a Director, Distinguished Engineer, and Master Inventor in IBM’s Watson division, where she leads the AI mission of enabling natural, personalized, and compassionate conversations between computers and humans.

In her talk, she explained that in creating an AI model, data is the fuel and the algorithm is the engine. First, a problem statement or key objective is identified; then a series of steps follows: data collection, data analysis, data packaging, testing and benchmarking, deployment, learning, and a continuous cycle of refining and improving. If the data or the model is biased, that bias carries through every one of those steps. To mitigate it, one first needs to find where the bias is embedded. The source may be the data itself: either the training data, which shapes how the AI learns to aggregate data, or the test data used to evaluate the aggregated results.
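To make the point about training and test data concrete, here is a minimal Python sketch under invented assumptions. The groups "A" and "B", their "morning" and "evening" meeting preferences, and the 90/10 split are all hypothetical, and the "model" is deliberately naive: it simply learns the most common answer. This is not IBM Watson's method or any real product's; it only illustrates how skew in the training data carries through to the model, and how a test set with the same skew can hide the problem.

```python
from collections import Counter

# Hypothetical toy data: (group, preferred_meeting_time) pairs, invented for illustration.
def make_records(group, preference, n):
    return [(group, preference)] * n

# Training data skewed 90/10 toward group "A" (a stand-in for data
# collected mostly in one culture).
train = make_records("A", "morning", 90) + make_records("B", "evening", 10)

def fit_majority(records):
    """Naive 'model': learn the single most common preference, ignoring group."""
    counts = Counter(pref for _, pref in records)
    return counts.most_common(1)[0][0]

def accuracy(prediction, records):
    """Share of records whose preference matches the model's prediction."""
    return sum(pref == prediction for _, pref in records) / len(records)

model = fit_majority(train)

# A test set with the same skew makes the model look good overall...
skewed_test = make_records("A", "morning", 18) + make_records("B", "evening", 2)
# ...while scoring each group separately exposes the embedded bias.
per_group = {g: accuracy(model, [r for r in skewed_test if r[0] == g]) for g in ("A", "B")}

print(model)                         # morning
print(accuracy(model, skewed_test))  # 0.9
print(per_group)                     # {'A': 1.0, 'B': 0.0}
```

The aggregate score of 90% looks healthy, yet the model never serves group "B". Reporting results per group, rather than only in aggregate, is one simple way to surface where bias is embedded.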

This led me to reconsider our own strategy process. How do we monitor our own bias in how we collect data, so that our analysis of each client’s needs is unbiased? Creating a machine learning model is very similar to the design thinking model we use with clients: we collect information about the client’s current state; analyze the data collected; package that information into challenges and opportunities for change; test and evaluate the design direction through benchmarking; deploy, or implement, the design solution; and learn how successful the design has been. We think of our process, as we think of AI, as rational, objective, and efficient. But are we embedding our own biases into that process? It is an interesting question to ask as we develop workspaces for our diverse array of clients in very different locations around the world.

Reflections on mediaX by Mary Lee Duff of IA's workplace strategy team.